WebAssembly (Wasm) has been the subject of buzz and bold predictions for years. Touted as the future of high-performance web apps and cross-platform development, it promised near-native speed, multi-language support, and sandboxed execution — all within the browser (and beyond).

Now in 2025, the question is no longer "What can Wasm do?" but rather "Is anyone really using it, and where?"

Spoiler: Yes. But the picture is nuanced.

🔧 What Is WebAssembly Again?

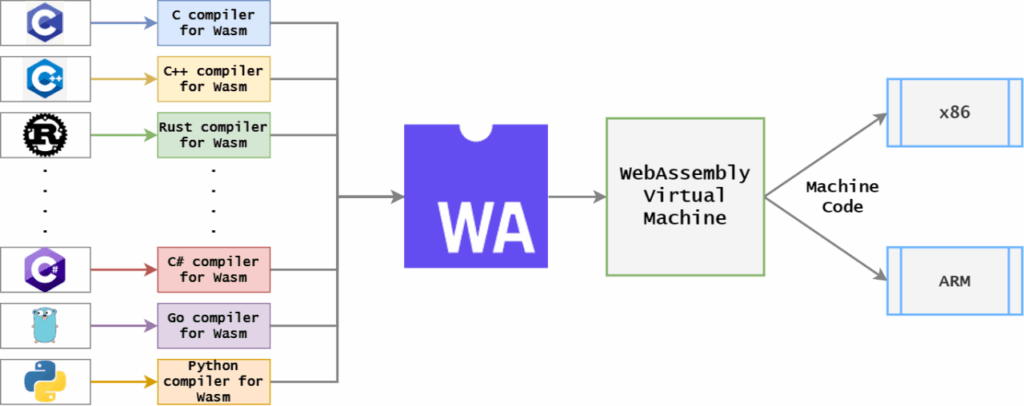

For a quick refresher, WebAssembly is a low-level, binary instruction format designed to be a safe, fast, and portable compilation target for languages like C, C++, Rust, Go, and more. It allows code to run in environments like web browsers with near-native speed.

In recent years, it’s expanded far beyond the browser — into serverless platforms, edge computing, and embedded systems.
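To make "binary instruction format" concrete, here is a minimal TypeScript sketch that writes out a tiny module by hand and runs it through the standard WebAssembly JavaScript API. The bytes and the exported name `add` are illustrative assumptions; in practice a Rust, C, or Go compiler would emit the binary for you.

```typescript
// A complete Wasm module, written out byte by byte. It exports one function,
// `add`, that takes two i32 values and returns their sum.
const addModuleBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number: "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function using type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" -> function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, // code section: one body, no extra locals
  0x20, 0x00, // local.get 0
  0x20, 0x01, // local.get 1
  0x6a, // i32.add
  0x0b, // end
]);

// Synchronous compile and instantiate keep the sketch short. Browsers usually
// prefer the async WebAssembly.instantiateStreaming for modules of real size.
const addModule = new WebAssembly.Module(addModuleBytes);
const addInstance = new WebAssembly.Instance(addModule);
const add = addInstance.exports.add as (a: number, b: number) => number;

const addResult = add(2, 3);
console.log(addResult); // 5
```

The same bytes load unchanged in a browser, in Node.js, or in a standalone runtime, which is what "portable compilation target" means in practice.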

✅ Where WebAssembly Is Gaining Real Adoption in 2025

1. Edge Computing & Serverless Platforms

This is perhaps the biggest success story of Wasm in 2025. Frameworks and platforms are increasingly using WebAssembly for secure, lightweight compute on the edge.

Real-World Use Cases:

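- Fastly’s Compute@Edge compiles every application to Wasm, while Cloudflare Workers and Vercel Edge Functions run on V8-based runtimes that can load Wasm modules alongside JavaScript. In each case, code executes closer to users with minimal cold-start time.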

- Fermyon Spin and Suborbital are gaining traction as developer-friendly platforms for building portable serverless applications with Wasm.

- Wasm’s sandboxing and tiny footprint make it ideal for multi-tenant environments and plugin systems where isolation is crucial.

The promise of deploying logic across dozens of edge locations — with strong security guarantees and low latency — is where Wasm shines brightest.
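The isolation property is easiest to see from the host side: a module can only call what the host explicitly passes in as imports. The sketch below assumes a hypothetical plugin that imports a single function, `host.log`, and exports `run`; both names and the hand-assembled bytes are made up for illustration.

```typescript
// Hypothetical plugin module, hand-assembled for illustration. It imports
// host.log (i32) -> () and exports run(i32), which calls host.log(x * 2).
const pluginBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x05, 0x01, 0x60, 0x01, 0x7f, 0x00, // type section: (i32) -> ()
  0x02, 0x0c, 0x01, // import section: one import
  0x04, 0x68, 0x6f, 0x73, 0x74, // module name "host"
  0x03, 0x6c, 0x6f, 0x67, // field name "log"
  0x00, 0x00, // kind: function, type 0
  0x03, 0x02, 0x01, 0x00, // function section: one function using type 0
  0x07, 0x07, 0x01, 0x03, 0x72, 0x75, 0x6e, 0x00, 0x01, // export "run" -> function 1
  0x0a, 0x0b, 0x01, 0x09, 0x00, // code section: one body, no extra locals
  0x20, 0x00, // local.get 0
  0x41, 0x02, // i32.const 2
  0x6c, // i32.mul
  0x10, 0x00, // call function 0 (the imported host.log)
  0x0b, // end
]);

const pluginModule = new WebAssembly.Module(pluginBytes);

// The host decides exactly which capabilities the plugin receives.
const pluginLog: number[] = [];
const pluginInstance = new WebAssembly.Instance(pluginModule, {
  host: { log: (value: number) => { pluginLog.push(value); } },
});
(pluginInstance.exports.run as (x: number) => void)(21);
// pluginLog now holds a single entry: 42

// Without the import, instantiation fails. The module has no other way to
// reach the file system, the network, or anything else on the host.
let rejectedWithoutImport = false;
try {
  new WebAssembly.Instance(pluginModule, { host: {} });
} catch {
  rejectedWithoutImport = true;
}
console.log(pluginLog, rejectedWithoutImport);
```

Edge platforms and plugin systems layer more on top of this (resource limits, WASI capabilities), but the deny-by-default import model is the foundation.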

2. Browser-Based Apps with Heavy Lifting

While React and JavaScript still dominate everyday web UIs, Wasm is powering performance-intensive tasks that would otherwise lag or crash the browser.

Real-World Use Cases:

- Design tools (e.g., Figma), CAD apps, and video editors are increasingly offloading complex rendering and data transformations to Wasm.

- Tesseract.js (OCR) and ffmpeg.wasm (video processing) bring established native libraries to the browser, and Blazor WebAssembly runs C# there, making apps feasible that were previously impractical on the client.

- In gaming, Unity and Godot ship web export targets that compile to Wasm for browser-based games. Unreal Engine dropped its official HTML5 target in version 4.24, so web builds there depend on community-maintained forks.

It’s not replacing JavaScript — but it’s enabling new classes of apps alongside it.
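A common shape for this division of labor is that JavaScript keeps the UI and I/O, copies input into the module's linear memory, and calls an exported function for the tight loop. The following sketch assumes a hypothetical kernel exporting `memory` and `sum(ptr, len)`; the module is hand-assembled here only so the example is self-contained, and a real kernel would be compiled from Rust or C++.

```typescript
// Hypothetical compute kernel, hand-assembled for illustration. It exports a
// one-page linear memory plus sum(ptr, len), which adds up `len` bytes
// starting at offset `ptr`.
const sumBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // one function using type 0
  0x05, 0x03, 0x01, 0x00, 0x01, // one memory, minimum 1 page (64 KiB)
  0x07, 0x10, 0x02, // export section: two exports
  0x06, 0x6d, 0x65, 0x6d, 0x6f, 0x72, 0x79, 0x02, 0x00, // "memory" -> memory 0
  0x03, 0x73, 0x75, 0x6d, 0x00, 0x00, // "sum" -> function 0
  0x0a, 0x2d, 0x01, 0x2b, // code section: one body of 43 bytes
  0x01, 0x01, 0x7f, // one extra i32 local (index 2): the accumulator
  0x02, 0x40, // block
  0x03, 0x40, // loop
  0x20, 0x01, 0x45, 0x0d, 0x01, // if len == 0, break out of the block
  0x20, 0x02, 0x20, 0x00, 0x2d, 0x00, 0x00, 0x6a, 0x21, 0x02, // acc += byte at ptr
  0x20, 0x00, 0x41, 0x01, 0x6a, 0x21, 0x00, // ptr += 1
  0x20, 0x01, 0x41, 0x01, 0x6b, 0x21, 0x01, // len -= 1
  0x0c, 0x00, // continue the loop
  0x0b, 0x0b, // end loop, end block
  0x20, 0x02, 0x0b, // return acc
]);

const sumInstance = new WebAssembly.Instance(new WebAssembly.Module(sumBytes));
const sumMemory = sumInstance.exports.memory as WebAssembly.Memory;
const sum = sumInstance.exports.sum as (ptr: number, len: number) => number;

// JavaScript copies the input into Wasm memory, then delegates the loop.
const pixels = new Uint8Array([1, 2, 3, 4, 250]);
new Uint8Array(sumMemory.buffer).set(pixels, 0);
const sumResult = sum(0, pixels.length);
console.log(sumResult); // 260
```

Summing five bytes is obviously not worth the round trip; the pattern pays off when the exported function does substantial work per call, as in image filters or codecs.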

3. Cross-Platform Runtime Environments

WebAssembly is turning into a universal runtime, much as Java once aimed to be, but with a smaller footprint and faster startup.

Examples:

- WasmEdge, Wasmtime, and Wasmer are powering CLI tools and services that run Wasm apps outside the browser — even on tiny devices.

- Rust and Go (through the standard toolchain or TinyGo) compile to Wasm for use in portable backends and IoT systems.

- Docker Desktop can run Wasm workloads alongside Linux containers through containerd shims, such as those built on containerd-shim-wasm from the runwasi project.

This makes it easy to write a module once and run it anywhere — cloud, CLI, edge, or embedded.

🛠️ Developer Ecosystem: Still Evolving

While Wasm has made great technical strides, developer tooling and ecosystem maturity are still catching up.

Pros:

- Rust, C/C++, and Go (including via TinyGo) have solid Wasm targets.

- WASI 0.2 (released in early 2024) is built on the Component Model, which simplifies module interoperability and dependency management, although the Component Model itself has not yet reached 1.0.

- Package tooling (such as wasm-pkg-tools and the Wasmer registry, the successor to WAPM) and bundlers now offer smoother developer experiences.

Challenges:

- Debugging Wasm is still clunky, especially in the browser.

- Multi-language support beyond Rust/C is improving, but still uneven.

- Tooling for full-stack Wasm apps (frontend + backend) is fragmented compared to established ecosystems like Node.js or Python.

In short: it’s getting better, but it’s still not “click-and-play” unless you’re already comfortable with systems languages.

🧩 Wasm + AI: A New Frontier

In 2025, Wasm is playing a growing role in AI model deployment at the edge.

How?

- You can run quantized machine learning models inside Wasm environments with frameworks like ONNX Runtime Web (the successor to ONNX.js) and TensorFlow.js with its Wasm backend.

- AI inference is being shipped directly into the browser for low-latency experiences (e.g., summarizing text, transcribing speech, personalizing UIs).

- On the server side, Wasm is being used to run AI plugins safely inside agents or sandboxed apps — especially in open-source AI assistant frameworks.

This blend of performance, portability, and safety makes Wasm a strong candidate for local-first AI applications.

🤔 Why Isn’t Wasm Everywhere Yet?

Despite real traction, WebAssembly is not replacing your entire tech stack — and probably never will. There are still limits:

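- No direct DOM access: In the browser, Wasm modules still have to call through JavaScript for every DOM interaction, which adds glue code and call overhead.

- Startup latency: Modules must be compiled and instantiated before they run, and although engines keep improving, that overhead is still noticeable next to native code in some environments.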

- Maturity: Compared to mature frameworks like Node.js, Django, or Spring, Wasm’s full-stack ecosystem is still relatively young.

- Developer familiarity: Most developers are still more comfortable with high-level languages and frameworks, and Wasm often requires learning Rust, Go, or C++.

In essence, Wasm is supplementary — not a silver bullet.
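The DOM limitation listed above shows up as glue code. Core Wasm functions can only pass numbers, so a string destined for the page travels as a pointer and a length into linear memory, and JavaScript does the decoding and the DOM write. The sketch below assumes a hypothetical module exporting `memory`, `msg_ptr`, and `msg_len`, and uses a plain object as a stand-in for a DOM node so that it also runs outside a browser.

```typescript
// Hypothetical module, hand-assembled for illustration. Its memory holds the
// text "Hi from Wasm" at offset 0, and it exports msg_ptr() and msg_len().
const labelBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7f, // type section: () -> i32
  0x03, 0x03, 0x02, 0x00, 0x00, // two functions, both using type 0
  0x05, 0x03, 0x01, 0x00, 0x01, // one memory, minimum 1 page
  0x07, 0x1e, 0x03, // export section: three exports
  0x06, 0x6d, 0x65, 0x6d, 0x6f, 0x72, 0x79, 0x02, 0x00, // "memory" -> memory 0
  0x07, 0x6d, 0x73, 0x67, 0x5f, 0x70, 0x74, 0x72, 0x00, 0x00, // "msg_ptr" -> function 0
  0x07, 0x6d, 0x73, 0x67, 0x5f, 0x6c, 0x65, 0x6e, 0x00, 0x01, // "msg_len" -> function 1
  0x0a, 0x0b, 0x02, // code section: two bodies
  0x04, 0x00, 0x41, 0x00, 0x0b, // msg_ptr: return 0
  0x04, 0x00, 0x41, 0x0c, 0x0b, // msg_len: return 12
  0x0b, 0x12, 0x01, 0x00, 0x41, 0x00, 0x0b, 0x0c, // data section: 12 bytes at offset 0
  0x48, 0x69, 0x20, 0x66, 0x72, 0x6f, 0x6d, 0x20, 0x57, 0x61, 0x73, 0x6d, // "Hi from Wasm"
]);

const labelInstance = new WebAssembly.Instance(new WebAssembly.Module(labelBytes));
const labelMemory = labelInstance.exports.memory as WebAssembly.Memory;
const msgPtr = (labelInstance.exports.msg_ptr as () => number)();
const msgLen = (labelInstance.exports.msg_len as () => number)();

// Wasm hands back only numbers, so JavaScript decodes the bytes itself.
const labelText = new TextDecoder().decode(
  new Uint8Array(labelMemory.buffer, msgPtr, msgLen),
);

// Stand-in for a real DOM node such as document.querySelector("#label").
const fakeElement = { textContent: "" };
fakeElement.textContent = labelText;
console.log(fakeElement.textContent); // Hi from Wasm
```

Toolchains such as wasm-bindgen or Emscripten generate this kind of glue automatically, which hides the boilerplate but not the cost of crossing the boundary.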

🔮 Looking Ahead: WebAssembly in the Next Few Years

As the Wasm Component Model matures and support for WASI (WebAssembly System Interface) grows, the next wave of Wasm adoption could be even bigger.

Here’s what’s on the horizon:

- Wasm in container orchestration: Kubernetes-native Wasm pods.

- More mature language targets: Swift, Kotlin, Zig, and others.

- Full-stack Wasm frameworks that rival Next.js or Django.

- Enterprise plugin systems that embed Wasm safely (think Adobe, Autodesk, and Unity).

💡 Final Thoughts

WebAssembly in 2025 is no longer just a promising idea. It’s a working solution in edge compute, performance-heavy browser apps, cross-platform runtimes, and even AI workloads.

But it’s not a wholesale replacement for your current stack. Instead, think of Wasm as a new capability — one that adds performance, security, and portability to the right parts of your system.

If you haven’t explored it yet, now’s a great time to experiment. Start with a Rust or Go module, try deploying to an edge function, or port a CPU-intensive browser feature. You might find WebAssembly becoming a quiet — but powerful — part of your stack.